About ChronOS Linux

From ChronOS Linux


Current revision as of 01:44, 21 December 2011


Background

ChronOS is derived from version 2.6.33.9 of the Linux kernel and uses the CONFIG_PREEMPT_RT real-time patch, which enables complete preemption in Linux and improves interrupt latencies. The patch is released under the GPLv2, which makes it suitable for academic research. ChronOS provides a set of APIs and a scheduler plugin infrastructure that can be used to implement and evaluate a variety of single- and multi-processor scheduling algorithms.

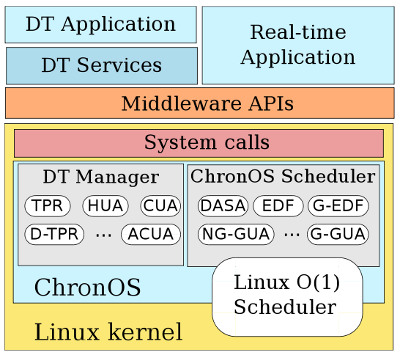

ChronOS Architecture

Figure 1 shows the overall architecture of ChronOS real-time Linux. ChronOS is derived from the Linux kernel and relies on the kernel's primitives for basic operating-system functions such as process and memory management, timers, interrupts, drivers, file systems, and networking. As shown in the figure, Linux provides the foundation of ChronOS. We extend the Linux O(1) scheduler to implement the ChronOS real-time scheduler, in which various single-processor scheduling algorithms (such as EDF, DASA, LBESA, HVDF, and RMA) and multiprocessor algorithms (such as G-EDF, G-NP-EDF, G-FIFO, NG-GUA, and G-GUA) have been implemented.

CONFIG_PREEMPT_RT Patch

The stock Linux kernel provides soft real-time capabilities, such as the POSIX primitives and the ability to set priorities. The kernel provides two real-time scheduling policies, SCHED_FIFO and SCHED_RR, and a “nice”-based scheduling policy called SCHED_NORMAL. Under SCHED_FIFO, a process keeps the CPU for as long as it wants, until a higher-priority task arrives. This is primarily a POSIX-specified feature. SCHED_RR, on the other hand, schedules processes of the same priority in round-robin fashion, while still favoring higher-priority tasks. The ChronOS real-time scheduler uses SCHED_FIFO as the underlying base scheduling algorithm and builds its scheduling framework on top of it. The scheduler is described in detail in the subsequent sections.
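From user space, the SCHED_FIFO policy that ChronOS builds on is selected with the standard POSIX call sched_setscheduler(). The following is a minimal sketch, not ChronOS code: the helper names clamp_fifo_priority() and become_fifo() are hypothetical, and switching policy normally requires CAP_SYS_NICE or root. Note that user-space sched_priority values grow with urgency (1 to 99 on Linux), the reverse of the kernel-internal numbering described later.

```c
#define _POSIX_C_SOURCE 200809L
#include <sched.h>

/* Clamp a requested priority into the SCHED_FIFO range reported by the
   system (1..99 on Linux). Returns -1 if the range cannot be queried. */
int clamp_fifo_priority(int prio)
{
    int lo = sched_get_priority_min(SCHED_FIFO);
    int hi = sched_get_priority_max(SCHED_FIFO);

    if (lo == -1 || hi == -1)
        return -1;
    if (prio < lo)
        return lo;
    if (prio > hi)
        return hi;
    return prio;
}

/* Switch the calling thread (pid 0) to SCHED_FIFO at a user-space priority.
   Returns 0 on success, or -1 with errno set (e.g. EPERM without privilege). */
int become_fifo(int prio)
{
    struct sched_param param = {0};
    int p = clamp_fifo_priority(prio);

    if (p == -1)
        return -1;
    param.sched_priority = p;
    return sched_setscheduler(0, SCHED_FIFO, &param);
}
```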

Real-time semantics require a preemptible kernel, but the stock Linux kernel does not allow “complete” kernel preemption. In particular, implementing scheduling algorithms such as G-EDF or G-GUA requires the ability to preempt the kernel. To achieve this we use the PREEMPT_RT patch. The patch enables complete kernel preemption along with a generic clock event layer with high-resolution support, thus providing hard real-time capabilities in the Linux kernel. With the PREEMPT_RT patch, most parts of the kernel can be preempted, except for a few small regions that are inherently non-preemptible, such as the task scheduler. To achieve complete preemption, the following changes were made to the Linux kernel.

- All the in-kernel locking primitives, such as spinlocks, have been re-implemented using rtmutexes. As a result, all critical sections protected with spinlock_t or rwlock_t are preemptible. However, the patch still allows non-preemptible sections to be created in the kernel where required.

- Priority Inheritance has been implemented for all in-kernel spinlocks and semaphores.

- All interrupt handlers in the kernel have been converted into kernel threads. All soft interrupt handlers run in kernel-thread context (i.e., they have an associated struct task_struct), so they can be treated as threads with higher priority and scheduled accordingly. However, it is still possible to register an IRQ handler that runs in hard-interrupt (non-threaded) context.

- The existing Linux timer APIs have been converted into separate infrastructure for high resolution kernel timers, including those for timeouts, which enable the use of user-space POSIX timers with high resolution.

Overall, the PREEMPT_RT patch improves interrupt latencies and provides a completely preemptible kernel.
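The high-resolution timers mentioned above are what make precise periodic releases from user space practical. A minimal sketch, assuming a periodic task released at absolute CLOCK_MONOTONIC times using the POSIX clock_nanosleep() call; run_periodic() and timespec_add_ns() are hypothetical helpers, not part of ChronOS or the PREEMPT_RT patch:

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <time.h>

#define NSEC_PER_SEC 1000000000L

/* Advance *t by ns nanoseconds (ns >= 0), keeping tv_nsec in [0, 1e9). */
void timespec_add_ns(struct timespec *t, long ns)
{
    t->tv_sec += ns / NSEC_PER_SEC;
    t->tv_nsec += ns % NSEC_PER_SEC;
    if (t->tv_nsec >= NSEC_PER_SEC) {
        t->tv_sec += 1;
        t->tv_nsec -= NSEC_PER_SEC;
    }
}

/* Call job() once per period for `iterations` periods. Sleeping until an
   absolute release time (TIMER_ABSTIME) keeps wake-up latency in one period
   from shifting all later releases. Returns 0, or -1 on a clock error. */
int run_periodic(void (*job)(void), long period_ns, int iterations)
{
    struct timespec next;

    if (clock_gettime(CLOCK_MONOTONIC, &next) != 0)
        return -1;
    for (int i = 0; i < iterations; i++) {
        int rc;

        timespec_add_ns(&next, period_ns);
        /* clock_nanosleep() returns an error number rather than setting errno;
           restart the same absolute sleep if a signal interrupts it. */
        do {
            rc = clock_nanosleep(CLOCK_MONOTONIC, TIMER_ABSTIME, &next, NULL);
        } while (rc == EINTR);
        if (rc != 0)
            return -1;
        if (job != NULL)
            job();
    }
    return 0;
}
```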

ChronOS Real-Time Scheduler

Since version 2.6, the Linux kernel has featured an O(1) scheduler. Every scheduling algorithm provided in the default Linux kernel (SCHED_NORMAL, SCHED_FIFO, SCHED_RR) completes in constant time, regardless of the number of processes in the system that are in the running state. The O(1) scheduler also implements SMP scalability, where each processor has its own locking and individual run-queues. The scheduler also implements SMP affinity, which enables processes to be assigned to a specific CPU.

Priority Bit-map and Run-queues

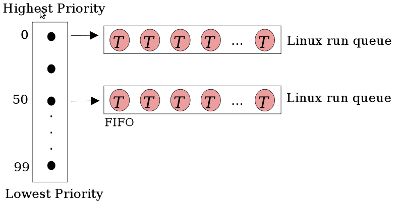

To achieve O(1) scheduling, Linux maintains a priority bit-map with one bit per priority level. There are 140 priority levels: [0...99] are referred to as real-time priorities, while [100...139] are called “nice” priorities. In kernel space, 0 is the highest real-time priority and 99 the lowest, which is the opposite of the user-space convention. Figure 2 shows the section of the priority bit-map that maps to the real-time priorities inside the kernel. As shown in the figure, each priority has a run-queue of active tasks on the system. The default scheduling algorithm used in Linux is SCHED_NORMAL, in which the amount of CPU that each process consumes, and the latency that it gets, are determined by its “nice” priority, which the kernel adjusts over time in an interactive fashion based on the consumption patterns of processes in the system. The kernel starts from the highest-priority bit in the bit-map, looks for tasks at that priority level, and executes them before moving to the next level. The key idea is to give preference to higher-priority tasks.
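The constant-time lookup can be illustrated with a user-space model of the 140-bit priority bit-map. This is a sketch, not the kernel's implementation (the kernel has its own find-first-bit helpers); the names are hypothetical and __builtin_ctzll() is a GCC/Clang builtin. As inside the kernel, a lower number means a higher priority:

```c
#include <stdint.h>

#define MAX_PRIO 140                          /* kernel priorities 0..139 */
#define BITMAP_WORDS ((MAX_PRIO + 63) / 64)   /* 3 x 64-bit words */

struct prio_bitmap {
    uint64_t bits[BITMAP_WORDS];
};

/* Mark priority level prio as having at least one runnable task. */
void prio_set(struct prio_bitmap *bm, int prio)
{
    bm->bits[prio / 64] |= UINT64_C(1) << (prio % 64);
}

/* Clear the bit once the run-queue for prio becomes empty. */
void prio_clear(struct prio_bitmap *bm, int prio)
{
    bm->bits[prio / 64] &= ~(UINT64_C(1) << (prio % 64));
}

/* Return the highest-priority (numerically lowest) non-empty level, or -1.
   The loop is bounded by the fixed number of words, independent of the
   number of runnable tasks. */
int prio_first_set(const struct prio_bitmap *bm)
{
    for (int w = 0; w < BITMAP_WORDS; w++) {
        if (bm->bits[w] != 0)
            return w * 64 + __builtin_ctzll(bm->bits[w]);
    }
    return -1;
}
```

Because the bit-map has a fixed size, prio_first_set() inspects at most three words however many tasks are runnable, which is what makes picking the next priority level O(1).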

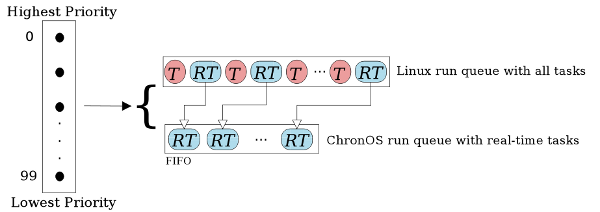

The ChronOS scheduler extends the Linux O(1) scheduler. To differentiate between normal tasks and the real-time tasks created by the ChronOS middleware, we add fields to struct task_struct that specify the real-time properties of a task, such as its worst-case execution cost, deadline, period, and time/utility functions (TUFs). ChronOS real-time tasks are tagged so that they stand out in the run-queue.

To make the real-time tasks easy to work with, for every priority level in the bit-map we create another queue, called the ChronOS real-time run-queue (CRT-RQ), which holds references to the real-time tasks in the Linux run-queue. This is illustrated in Fig 3. As shown in the figure, the entries in the CRT-RQ are references to the real-time tasks in the default Linux run-queue. The CRT-RQ works like the Linux run-queue. When a task enters the system, it is added to the run-queue corresponding to its priority. If the new task is tagged as a ChronOS real-time task, a reference to it is also added to the CRT-RQ for that priority level. When the ChronOS scheduler is enabled, it looks at the CRT-RQ, orders it according to the selected scheduling algorithm, and picks the task at the head of the CRT-RQ. When the ChronOS scheduler picks a real-time task, the task is removed from both the default Linux run-queue and the CRT-RQ.
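The relationship between the Linux run-queue and the CRT-RQ at a single priority level can be modelled as below. This is a hypothetical user-space sketch: the struct fields mirror the parameters named above (worst-case execution cost, deadline, period) but are not ChronOS's actual task_struct fields, TUFs are omitted, and fixed-size arrays stand in for the kernel's lists. The CRT-RQ stores pointers only, so picking a task means dropping it from both arrays:

```c
#include <stdbool.h>
#include <stddef.h>

#define QCAP 16

/* Hypothetical task descriptor with real-time extensions. */
struct task {
    int id;
    bool chronos_rt;        /* tagged as a ChronOS real-time task */
    long exec_cost_us;      /* worst-case execution cost */
    long deadline_us;       /* deadline */
    long period_us;         /* period */
};

/* One priority level: the ordinary run-queue plus the CRT-RQ, which holds
   references (pointers) to the real-time tasks in that run-queue. */
struct prio_level {
    struct task *rq[QCAP];
    size_t rq_len;
    struct task *crt_rq[QCAP];
    size_t crt_len;
};

/* Remove the reference t from queue q, preserving the order of the rest. */
static void remove_ref(struct task **q, size_t *len, const struct task *t)
{
    for (size_t i = 0; i < *len; i++) {
        if (q[i] == t) {
            for (size_t j = i + 1; j < *len; j++)
                q[j - 1] = q[j];
            (*len)--;
            return;
        }
    }
}

/* Every task goes on the run-queue; tagged tasks are also referenced from
   the CRT-RQ. Returns false if the level is full. */
bool level_enqueue(struct prio_level *lv, struct task *t)
{
    if (lv->rq_len == QCAP)
        return false;
    lv->rq[lv->rq_len++] = t;
    if (t->chronos_rt)
        lv->crt_rq[lv->crt_len++] = t;
    return true;
}

/* Take the head of the CRT-RQ (assumed already ordered by the active
   algorithm) and remove it from both queues. Returns NULL if empty. */
struct task *level_pick_rt(struct prio_level *lv)
{
    if (lv->crt_len == 0)
        return NULL;
    struct task *t = lv->crt_rq[0];
    remove_ref(lv->crt_rq, &lv->crt_len, t);
    remove_ref(lv->rq, &lv->rq_len, t);
    return t;
}
```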

Scheduling Real-Time Tasks

A real-time application in ChronOS needs to specify the start and end of each real-time segment. A real-time segment is a portion of a thread that must be executed under real-time timing constraints. Segments are delimited using the following system calls provided by ChronOS.

- begin_rt_seg(): indicates the start of a real-time scheduling segment and provides the real-time timing constraints for the task.

- end_rt_seg(): indicates the end of a real-time scheduling segment.

- set_scheduler(): enables a scheduling algorithm.
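The intended usage pattern, bracketing each job's time-critical work between the start and end of a real-time segment, can be sketched as follows. The exact arguments of begin_rt_seg(), end_rt_seg(), and set_scheduler() are not given on this page, so the sketch uses hypothetical stand-in stubs (stub_begin_rt_seg() and friends), a hypothetical struct rt_params, and illustrative parameter values; in a real application the stubs would be replaced by the ChronOS system-call wrappers:

```c
#include <stddef.h>

/* Hypothetical timing constraints passed when a segment begins. */
struct rt_params {
    long deadline_us;
    long exec_cost_us;
    long period_us;
};

/* Stand-in stubs that only track state; they are NOT the ChronOS calls. */
static int in_segment;   /* 1 while inside a real-time segment */
static int segs_done;    /* segments completed so far */

int stub_set_scheduler(const char *name)
{
    return name != NULL ? 0 : -1;
}

int stub_begin_rt_seg(const struct rt_params *p)
{
    if (p == NULL || in_segment)
        return -1;       /* this sketch does not allow nested segments */
    in_segment = 1;
    return 0;
}

int stub_end_rt_seg(void)
{
    if (!in_segment)
        return -1;       /* end without a matching begin */
    in_segment = 0;
    segs_done++;
    return 0;
}

/* Thread body: select an algorithm once, then bracket each job's
   time-critical work in its own real-time segment. */
int run_jobs(int njobs, void (*work)(void))
{
    const struct rt_params p = { .deadline_us = 10000,
                                 .exec_cost_us = 2000,
                                 .period_us = 10000 };

    if (stub_set_scheduler("EDF") != 0)
        return -1;
    for (int i = 0; i < njobs; i++) {
        if (stub_begin_rt_seg(&p) != 0)
            return -1;
        if (work != NULL)
            work();
        if (stub_end_rt_seg() != 0)
            return -1;
    }
    return segs_done;
}
```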

The real-time scheduler is invoked at various scheduling events. A scheduling event is a trigger that forces the system into a scheduling cycle: the scheduler is called and picks a new task according to the selected scheduling algorithm. ChronOS defines the following scheduling events.

- A task entering the system: when a new task is added to the system, the scheduler is invoked. The scheduling algorithm looks at the CRT-RQ, orders the queue, and picks the task at its head for scheduling.

- A task leaving the system: when a task finishes its scheduling segment and leaves the system, the scheduler is invoked. The scheduling algorithm again orders the CRT-RQ and picks the task at its head for scheduling.

- A resource being requested: when a task requests a resource, ChronOS tags the task as RESOURCE REQUESTED and invokes the scheduler. This lets the scheduling algorithm examine the dependency chain of the requested resource and pick the task best suited for execution.

- A resource being released: when a task releases a resource, ChronOS invokes the scheduler so that a task that may have been blocked on the just-released resource can be picked. The choice of the new task is made by the scheduling algorithm.

The scheduling algorithm itself is selected with the set_scheduler() system call.

Single-processor Scheduling

All scheduling algorithms in ChronOS are created as Linux kernel modules, which makes it possible to add or remove a scheduling algorithm from a running kernel without restarting the system. The algorithms are implemented in a modular fashion using a set of functions that we refer to as the “scheduler plugin”. Once a scheduler is selected for a set of processors, all the real-time tasks that are added to the system on those processors are scheduled using the selected scheduling algorithm. The scheduler plugin is described in detail in a subsequent section.
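A scheduler plugin can be thought of as a named table of function pointers that a module registers when it is loaded. The sketch below is a hypothetical user-space model: struct sched_plugin, register_plugin(), unregister_plugin(), and find_plugin() are illustrative names, not the ChronOS plugin interface, which this page does not show. In a kernel module the register and unregister calls would typically sit in the module's init and exit functions:

```c
#include <stddef.h>
#include <string.h>

#define MAX_PLUGINS 8

struct task;  /* opaque in this sketch */

/* Hypothetical plugin descriptor. */
struct sched_plugin {
    const char *name;
    /* Order the CRT-RQ and return the task to run next. */
    struct task *(*schedule)(struct task **crt_rq, size_t len);
};

static const struct sched_plugin *registry[MAX_PLUGINS];
static size_t nregistered;

/* Called when a scheduler module is loaded. Returns 0, or -1 if full. */
int register_plugin(const struct sched_plugin *p)
{
    if (nregistered == MAX_PLUGINS)
        return -1;
    registry[nregistered++] = p;
    return 0;
}

/* Called when the module is unloaded; order of the registry is not kept. */
void unregister_plugin(const struct sched_plugin *p)
{
    for (size_t i = 0; i < nregistered; i++) {
        if (registry[i] == p) {
            registry[i] = registry[--nregistered];
            return;
        }
    }
}

/* Lookup used when an algorithm is selected: NULL if not loaded. */
const struct sched_plugin *find_plugin(const char *name)
{
    for (size_t i = 0; i < nregistered; i++)
        if (strcmp(registry[i]->name, name) == 0)
            return registry[i];
    return NULL;
}
```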

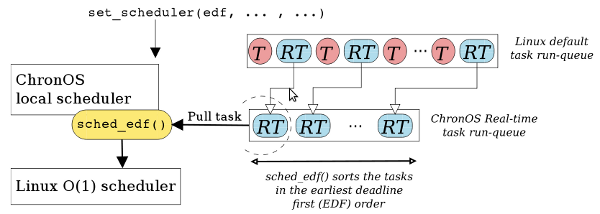

Figure 4 illustrates an example of scheduling on a single-processor machine. Because scheduling algorithms are written as modules in ChronOS, they can be loaded into a running kernel using modprobe, which registers them and adds them to the list of available schedulers in ChronOS. In the figure, the real-time application calls the set_scheduler() system call to select EDF as the real-time scheduler. ChronOS checks whether the EDF kernel module is available. If the scheduler is found, ChronOS loads the plugin and makes it the default ChronOS local scheduler for running real-time tasks. All real-time tasks are now added to the CRT-RQ. At every scheduling event, the ChronOS scheduler invokes sched_edf(), which sorts the CRT-RQ in Earliest Deadline First order. The head of the queue now holds the task with the earliest deadline, which the ChronOS local scheduler pulls and hands to the Linux O(1) scheduler for execution.
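The EDF step performed at each scheduling event amounts to sorting the CRT-RQ by absolute deadline and taking the head. A minimal user-space sketch using the standard qsort(); sched_edf_sketch() and struct rt_task are hypothetical stand-ins for the real sched_edf() and task structure:

```c
#include <stdlib.h>

struct rt_task {
    int id;
    long abs_deadline;   /* absolute deadline, e.g. in microseconds */
};

/* qsort comparator over task pointers: earlier deadline first. Comparing
   explicitly avoids the overflow a subtraction could cause. */
static int by_deadline(const void *a, const void *b)
{
    const struct rt_task *x = *(const struct rt_task *const *)a;
    const struct rt_task *y = *(const struct rt_task *const *)b;
    return (x->abs_deadline > y->abs_deadline) -
           (x->abs_deadline < y->abs_deadline);
}

/* Sort the CRT-RQ (an array of task pointers) in EDF order and return its
   head, or NULL if the queue is empty. */
struct rt_task *sched_edf_sketch(struct rt_task **crt_rq, size_t len)
{
    if (len == 0)
        return NULL;
    qsort(crt_rq, len, sizeof crt_rq[0], by_deadline);
    return crt_rq[0];
}
```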

Multiprocessor Scheduling

Scheduling on multiprocessors falls mainly into two categories: partitioned scheduling and global scheduling. ChronOS supports both. In this section we describe both architectures and discuss their design and implementation.

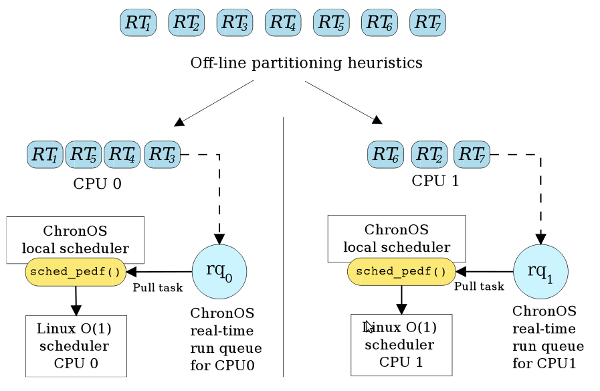

Partitioned Scheduling

Partitioned scheduling can be described as uniprocessor scheduling done on multiprocessors. The key idea of partitioned scheduling is to divide the task-set using an off-line heuristic, as partitioning a set of tasks on M processors has been shown to be equivalent to the bin-packing problem and hence NP-hard in the strong sense.

Figure 5 illustrates the partitioned scheduling approach used in ChronOS. The task set is partitioned off-line using polynomial-time heuristics such as first-fit, worst-fit, and best-fit. Fig 5 shows a two-processor system, for which the heuristic divides the task set into two processor bins. Once all the tasks have been divided, the real-time application sets the affinity of each task to the processor it has been assigned to. This ensures that the tasks are added to the run-queues of their assigned processors; references to these real-time tasks are also added to the CRT-RQs of those processors. As partitioned scheduling is an extension of uniprocessor scheduling, we set the partitioned scheduler as the local ChronOS scheduler on all processors using the set_scheduler() system call. Each processor runs its scheduling algorithm independently. At every scheduling event, the processor enters its local scheduler, looks at the local run-queue, and uses the selected scheduling algorithm (P-EDF in the figure) to pick the next task to be executed. Because the tasks have already been partitioned, we disable Linux’s load-balancing mechanism to prevent tasks from being migrated between processors. Algorithms implemented in ChronOS using this approach include P-EDF and P-DASA.
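The off-line side of partitioned scheduling can be sketched with first-fit, one of the heuristics named above, treating each task's utilization (execution cost divided by period) as its size and each processor as a bin of capacity 1.0, the EDF bound on a uniprocessor. That capacity and the helper names first_fit() and pin_to_cpu() are assumptions of this sketch. The Linux sched_setaffinity() call then pins a task to its assigned processor:

```c
#define _GNU_SOURCE
#include <sched.h>
#include <stddef.h>

/* First-fit: place each task on the first processor whose load stays
   within capacity 1.0. assign[i] receives task i's processor and load[]
   the final per-processor utilization. Returns 0, or -1 if some task fits
   on no processor (the heuristic fails, not necessarily the task set). */
int first_fit(const double *util, size_t ntasks, int ncpus,
              int *assign, double *load)
{
    for (int c = 0; c < ncpus; c++)
        load[c] = 0.0;
    for (size_t i = 0; i < ntasks; i++) {
        assign[i] = -1;
        for (int c = 0; c < ncpus; c++) {
            if (load[c] + util[i] <= 1.0) {
                load[c] += util[i];
                assign[i] = c;
                break;
            }
        }
        if (assign[i] == -1)
            return -1;
    }
    return 0;
}

/* Pin the calling thread to one processor so that it is enqueued only on
   that processor's run-queue. Returns 0, or -1 with errno set. */
int pin_to_cpu(int cpu)
{
    cpu_set_t set;

    CPU_ZERO(&set);
    CPU_SET(cpu, &set);
    return sched_setaffinity(0, sizeof set, &set);
}
```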

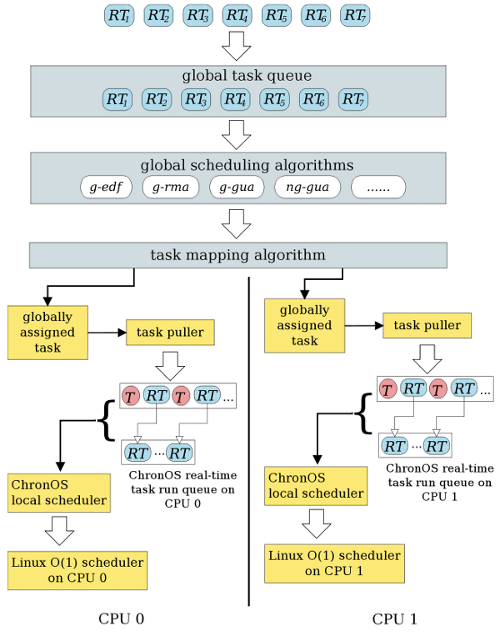

Global Scheduling

Most of the multiprocessor scheduling algorithms, such as G-EDF, G-NP-EDF, Pfair, gMUA, G-GUA, and NG-GUA are based on global scheduling. The main idea behind global scheduling is that the tasks are assigned to a global queue instead of individual local queues. The scheduling algorithm on each processor looks at the global queue and either makes a scheduling decision for itself and every other processor in the system (such as G-EDF, Pfair, G-GUA, NG-GUA) or picks a task only for itself (such as G-NP-EDF).

Figure 6 illustrates the global scheduling approach used in ChronOS. To implement global scheduling inside ChronOS, we create another level of scheduling abstraction. At the top is the “global scheduler”, which looks at the “global task queue”. The global scheduler maps to a “local scheduler” on each processor, which extends the Linux O(1) scheduler. The global scheduler (invoked on a processor) can either pick a task for itself or decide for all the processors on the system, depending on the scheduling policy used. If the global scheduler needs to choose tasks for all available processors (as in G-EDF, G-GUA, or NG-GUA), it picks the top M tasks. These tasks are given to the task mapping algorithm, which maps them onto the M underlying processors. The tasks assigned by the task mapping algorithm are pushed into the “globally assigned task” block, from which the “task puller” on each CPU picks up its task and moves it to the head of its local queue. The default local scheduling algorithm for global scheduling on each processor is SCHED_FIFO, which picks the head of the CRT-RQ and gives the task to the Linux O(1) scheduler for execution.

In global scheduling under ChronOS, tasks can be created and assigned to any processor. The tasks continue to reside on the Linux run-queue of the processor they were created on, and the global queue holds references to all the real-time tasks from all the processors.

As mentioned earlier, global scheduling can be achieved in two ways. In the first approach, the global scheduler picks a task only for itself from the global queue, as in G-NP-EDF. We refer to this as the “Application Concurrent Scheduling Model”. In the second approach, the global scheduler picks the tasks for all M available processors, as in Pfair, NG-GUA, and G-GUA. We refer to this as the “Stop-the-World Scheduling Model” (STW).
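When the global scheduler decides for all processors, its first step is picking the top M tasks from the global queue. A sketch assuming a G-EDF-style ranking by absolute deadline (other algorithms, such as G-GUA, rank tasks differently); struct gtask and pick_top_m() are hypothetical:

```c
#include <stddef.h>

struct gtask {
    int id;
    long abs_deadline;
};

/* Partial selection sort: move the m earliest-deadline tasks to the front
   of gq (reordering it) and copy them to out[] in priority order.
   O(m * n), adequate for small queues. Returns the number selected. */
size_t pick_top_m(struct gtask **gq, size_t n, size_t m, struct gtask **out)
{
    if (m > n)
        m = n;
    for (size_t k = 0; k < m; k++) {
        size_t best = k;
        for (size_t i = k + 1; i < n; i++)
            if (gq[i]->abs_deadline < gq[best]->abs_deadline)
                best = i;
        struct gtask *tmp = gq[k];
        gq[k] = gq[best];
        gq[best] = tmp;
        out[k] = gq[k];
    }
    return m;
}
```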

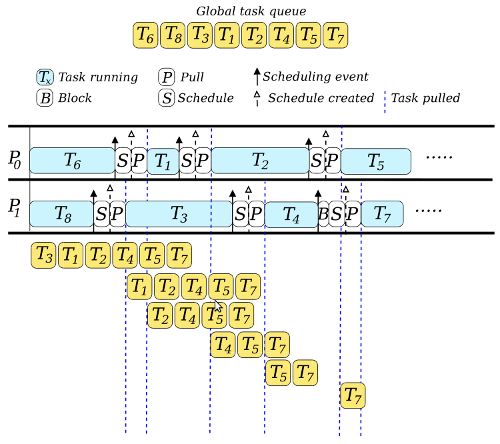

Application Concurrent Scheduling Model

Figure 7 illustrates the application concurrent scheduling model for global scheduling in ChronOS. To explain the model, we assume that the scheduling algorithm picks the task at the head of the queue. At the beginning, task T6 is running on processor P0 and task T8 is running on processor P1. As shown in the figure, T8 finishes before T6; T6, however, is not preempted on P0 and continues to run. When T8 finishes, it generates a scheduling event. P1 enters the global scheduler, picks the first task, T3, from the global queue, and assigns it to the “globally assigned task” block. The local scheduler on P1 pulls T3 and starts executing it. While P1 is pulling the task, T6 finishes on processor P0 and generates a scheduling event. P0 pulls T1 from the global queue and starts executing it without preempting T3 on P1. The same procedure is repeated for other scheduling events. Both processors may finish their tasks at the same time: as shown in the figure, T2 finishes on P0 at around the same time as T4 finishes on P1. Because the global task queue is shared by all the processors, P1 blocks while P0 is in the global scheduler. As soon as P0 is done scheduling, P1 unblocks and enters the scheduler. G-NP-EDF and G-FIFO are among the algorithms that use this model.
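The blocking behaviour described above follows from protecting the shared global queue with a single lock. A minimal sketch using a POSIX mutex; struct global_queue and acs_pick_for_self() are hypothetical names, and integer ids stand in for task references:

```c
#include <pthread.h>
#include <stddef.h>

#define GQ_CAP 32

/* Global task queue shared by all processors, kept in priority order
   (head = index 0). While one processor holds the lock, a second one
   entering the global scheduler blocks in pthread_mutex_lock(). */
struct global_queue {
    pthread_mutex_t lock;
    int ids[GQ_CAP];
    size_t len;
};

/* Application Concurrent model: the calling processor takes the head task
   for itself only; tasks running on other processors are not preempted.
   Returns the task id, or -1 if the queue is empty. */
int acs_pick_for_self(struct global_queue *gq)
{
    int id = -1;

    pthread_mutex_lock(&gq->lock);
    if (gq->len > 0) {
        id = gq->ids[0];
        for (size_t i = 1; i < gq->len; i++)
            gq->ids[i - 1] = gq->ids[i];
        gq->len--;
    }
    pthread_mutex_unlock(&gq->lock);
    return id;
}
```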

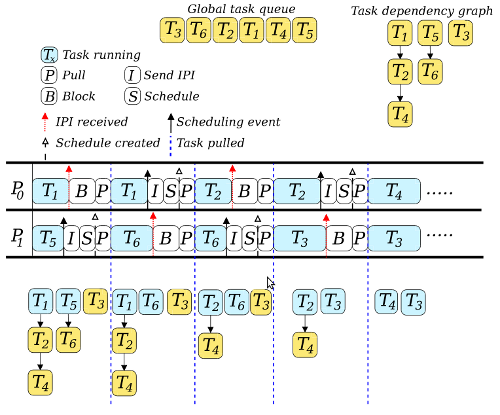

Stop-the-World Scheduling Model

To support global scheduling algorithms with resource management, ChronOS implements the Stop-the-World scheduling model. Figure 8 illustrates the model. For the sake of explanation, we assume that the scheduling algorithm selects for execution only tasks that do not depend on any other task in the system, and that the global task queue holds the tasks eligible for the final schedule. The figure shows the dependency relations among the tasks in the global task queue. Task T4 needs a resource owned by task T2, which in turn requires a resource held by task T1. Similarly, task T6 needs a resource owned by task T5. Task T3, on the other hand, has no dependents. We further assume that the scheduling algorithm considers the tasks with the most dependents to be the most eligible for the final schedule.

Under these assumptions, Figure 8 shows tasks T1 and T5 executing on processors P0 and P1, respectively. Task T5 finishes first and generates a scheduling event. In the Stop-the-World model, once a scheduling event is generated, a schedule must be created for all the processors, so a processor must be able to force a scheduling event on all other processors. When task T5 triggers the scheduling event, processor P1 sends an inter-processor interrupt (IPI) to all the processors on the system. In Linux, the scheduler is called at the end of every interrupt handler so that the next task can be picked for execution once the interrupt has been handled. The IPI used by the scheduler is a dummy interrupt: its handler does nothing except call the schedule() function at the end, which forces the processor to enter the scheduler.

In the example shown in Figure 8, processor P1 sends an IPI to all the available processors on the system. After sending the IPI, P1 enters its global scheduler and looks at the available tasks in the run-queue to create the schedule. Meanwhile, processor P0 receives the IPI and is forced into the scheduler; however, because P1 is already in the global scheduler, P0 blocks. The global scheduler on P1 picks two eligible tasks (assuming a two-processor system) and hands them to the mapping algorithm. The task mapper pushes these tasks into the “globally assigned task” block of each processor. Once the mapper is done, each processor pulls its globally assigned task to the head of its local queue. The local scheduler on each processor (SCHED_FIFO) picks the task at the head of the local queue and gives it to the Linux O(1) scheduler for execution. The Stop-the-World model works the same way when the tasks have no dependency relationships: the scheduling algorithm looks at the global queue, picks the M tasks eligible for the final schedule, and hands them to the mapping algorithm, which is explained in detail in the next section.
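The selection rule assumed in the Figure 8 example (run only tasks that are not blocked, preferring those with the most dependents) can be sketched as below. Each task records the task whose resource it is waiting for; the names, the NTASKS limit, and the choice to count dependents transitively are assumptions made for illustration, not the policy of any particular ChronOS algorithm:

```c
#define NTASKS 8

/* waits_on[i] is the task holding the resource task i needs, or -1 if
   task i is not blocked. Counts tasks that wait on t directly or through
   a chain of holders. */
static int count_dependents(const int *waits_on, int n, int t)
{
    int count = 0;

    for (int i = 0; i < n; i++) {
        int cur = waits_on[i];
        /* Walk i's chain; the step bound guards against dependency cycles. */
        for (int steps = 0; cur != -1 && steps < n; steps++) {
            if (cur == t) {
                count++;
                break;
            }
            cur = waits_on[cur];
        }
    }
    return count;
}

/* Pick up to m unblocked tasks, most dependents first (ties keep the lower
   index). Writes their indices to out[] and returns how many were picked,
   or -1 if n exceeds NTASKS. */
int pick_by_dependents(const int *waits_on, int n, int m, int *out)
{
    int chosen[NTASKS] = {0};
    int k;

    if (n > NTASKS)
        return -1;
    for (k = 0; k < m; k++) {
        int best = -1, best_deps = -1;

        for (int t = 0; t < n; t++) {
            if (waits_on[t] != -1 || chosen[t])
                continue;
            int d = count_dependents(waits_on, n, t);
            if (d > best_deps) {
                best = t;
                best_deps = d;
            }
        }
        if (best == -1)
            break;
        chosen[best] = 1;
        out[k] = best;
    }
    return k;
}
```

Encoding T1 to T6 as indices 0 to 5, with T2 waiting on T1, T4 on T2, and T6 on T5 (waits_on = {-1, 0, -1, 1, -1, 4}), the sketch picks T1 (two dependents) and T5 (one dependent) for a two-processor system, which matches the tasks shown running in Figure 8.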

Mapping Tasks to Processors

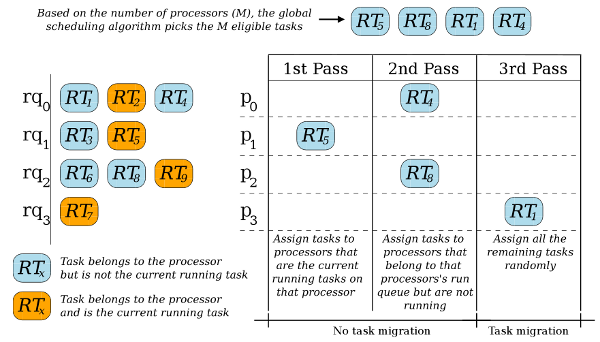

Fig 9 illustrates the default mapping algorithm used in ChronOS for global scheduling. The job of the mapping algorithm is to take the M most eligible tasks selected by the scheduling algorithm and map them to the M available processors on the system. The key idea is to reduce task migrations, thus preserving cache affinity. The algorithm shown in Fig 9 is the default for all global scheduling algorithms; however, the ChronOS scheduler plugin infrastructure allows users to override it with their own implementation.

In ChronOS, the mapping is done using a three-pass algorithm. In Fig 9, tasks RT5, RT8, RT1, and RT4 are the four real-time tasks selected by the global scheduling algorithm at the end of a scheduling event. To explain the mapping algorithm, the figure gives a snapshot of the per-processor run-queues; each run-queue shows the tasks that belong to an individual processor. The figure also highlights the task that was running on each processor before the scheduling event that led to the new global schedule: task RT2 on P0, RT5 on P1, RT9 on P2, and RT7 on P3.

In the first pass, the algorithm goes over the final schedule and maps each task that is currently running on a processor to that same processor, which preserves cache affinity. As shown in Fig 9, task RT5 is the current running task on P1, so it is mapped to P1. In the second pass, the algorithm goes over the final schedule again and maps tasks to the processors whose run-queues they belong to, which prevents unnecessary migrations. As shown in the figure, task RT4 belongs to processor P0 and is therefore mapped to P0. Similarly, task RT8 belongs to processor P2 and is mapped to that processor. In the last pass, the mapping algorithm randomly assigns the leftover tasks to the remaining processor(s). As shown in the figure, task RT1 is assigned to processor P3, even though it belongs to processor P0; this step therefore results in task migrations. The mapping algorithm reduces task migrations but cannot always avoid them: if the final schedule consists of M tasks that all belong to the same processor, the worst case is M − 1 task migrations. In such cases, cache-aware scheduling algorithms can create a cache-conscious schedule and handle their own mapping, overriding the default mapping algorithm.
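The three passes can be sketched as follows. This is a hypothetical user-space model, not the ChronOS mapper: pass 3 takes the lowest-numbered free processor instead of a random one so that the result is deterministic, and pass 2 handles tasks in schedule order, so when two selected tasks belong to the same processor the higher-ranked one stays home. Reproducing Fig 9 therefore assumes RT4 is ranked ahead of RT1. The sketch also assumes m <= MAXCPU and that every home processor index is below m:

```c
#include <stdbool.h>

#define MAXCPU 8

/* One selected task: its id and the processor whose run-queue owns it. */
struct sel_task {
    int id;
    int home_cpu;
};

/* Map m selected tasks onto m processors. running[c] is the id of the task
   that was running on CPU c; cpu_of[i] receives the CPU for sched[i].
   Returns the number of migrations (tasks placed away from home). */
int map_tasks(const struct sel_task *sched, int m, const int *running,
              int *cpu_of)
{
    bool cpu_used[MAXCPU] = {false};
    int migrations = 0;

    for (int i = 0; i < m; i++)
        cpu_of[i] = -1;

    /* Pass 1: keep a task on the CPU where it is already running. */
    for (int i = 0; i < m; i++)
        for (int c = 0; c < m; c++)
            if (!cpu_used[c] && running[c] == sched[i].id) {
                cpu_of[i] = c;
                cpu_used[c] = true;
                break;
            }

    /* Pass 2: place a task on its home CPU if that CPU is still free. */
    for (int i = 0; i < m; i++)
        if (cpu_of[i] == -1 && !cpu_used[sched[i].home_cpu]) {
            cpu_of[i] = sched[i].home_cpu;
            cpu_used[sched[i].home_cpu] = true;
        }

    /* Pass 3: leftovers go to a free CPU; their home CPU is taken, so each
       one is a migration. */
    for (int i = 0; i < m; i++) {
        if (cpu_of[i] != -1)
            continue;
        for (int c = 0; c < m; c++)
            if (!cpu_used[c]) {
                cpu_of[i] = c;
                cpu_used[c] = true;
                break;
            }
        migrations++;
    }
    return migrations;
}
```

With the schedule {RT5, RT4, RT8, RT1}, home processors P1, P0, P2, P0, and RT2, RT5, RT9, RT7 running on P0 to P3, the sketch maps RT5 to P1, RT4 to P0, RT8 to P2, and RT1 to P3, and reports one migration. If all M tasks share one home processor, it reports M − 1 migrations, the worst case mentioned above.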
